DeepSeek-OCR: The SOTA Contexts Optical Compression for Document AI

Unlock unprecedented document understanding and high-speed OCR with the DeepSeek-OCR model. From image-to-markdown to complex table parsing, experience next-generation Contexts Optical Compression.

High-Speed Inference up to 2500 tokens/s on an A100-40G.

from 99+ happy users

Get Started with DeepSeek-OCR

Integrate the model into your workflow in three simple steps:

What is DeepSeek-OCR

DeepSeek-OCR is an advanced Multimodal Large Language Model (MLLM) focused on optimizing the Optical Compression of visual data, pushing the boundaries of Visual-Text Compression for complex documents.

- LLM-Centric VisionA novel approach investigating the role of vision encoders from an LLM-centric viewpoint for better context comprehension.

- Contextual OCR & ParsingGoes beyond simple text recognition to understand document structure, layouts, and contextual meaning.

- Scalable Resolution SupportSupports multiple native and dynamic resolutions to handle diverse document sizes without losing fidelity.

Core Capabilities of DeepSeek-OCR

From high-fidelity text extraction to complex document structure conversion, DeepSeek-OCR handles all your Document AI needs.

Prompting for Powerful Document AI

Leverage DeepSeek-OCR's contextual understanding with specialized prompts.

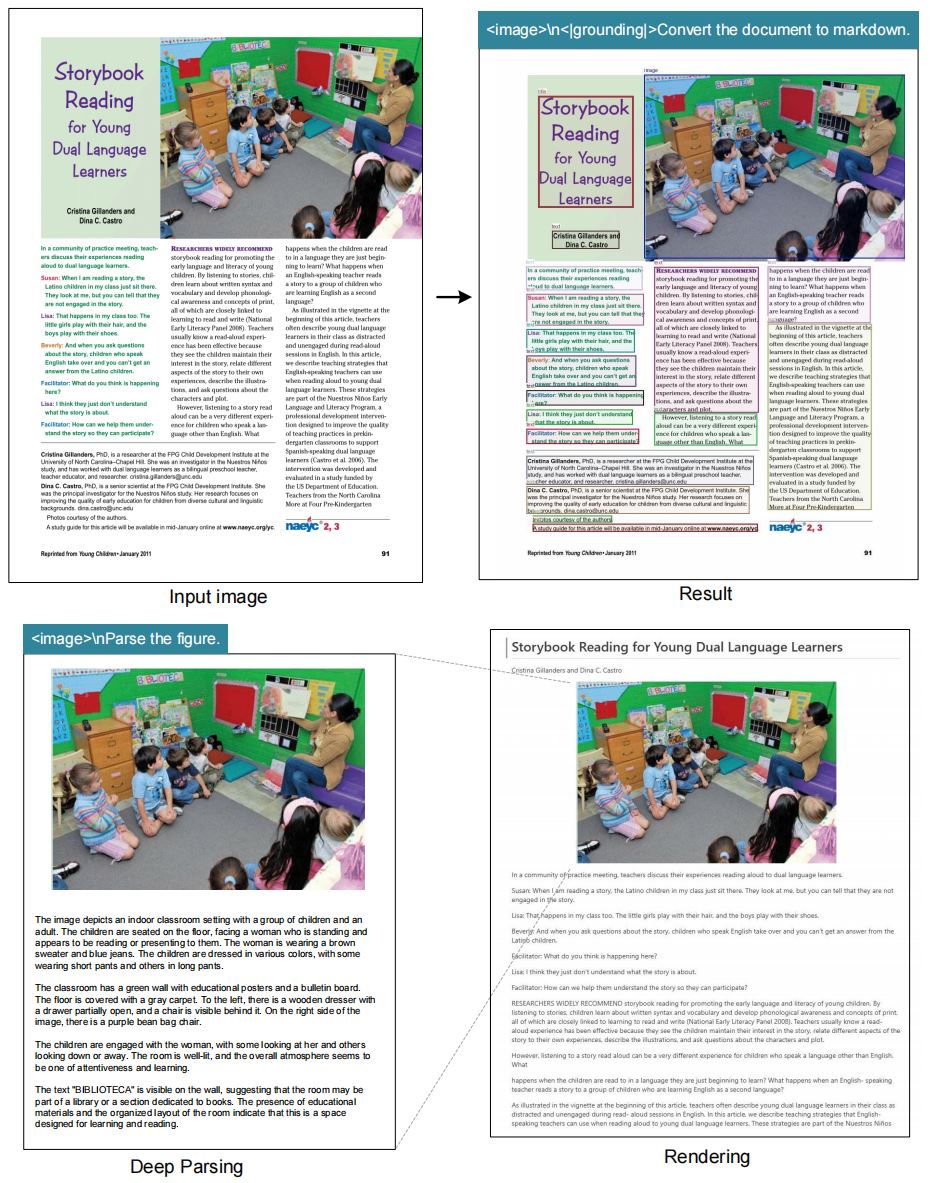

Contextual Document Parsing

Prompt: `<image>\n<|grounding|>Convert the document to markdown.` (Ideal for structured extraction).

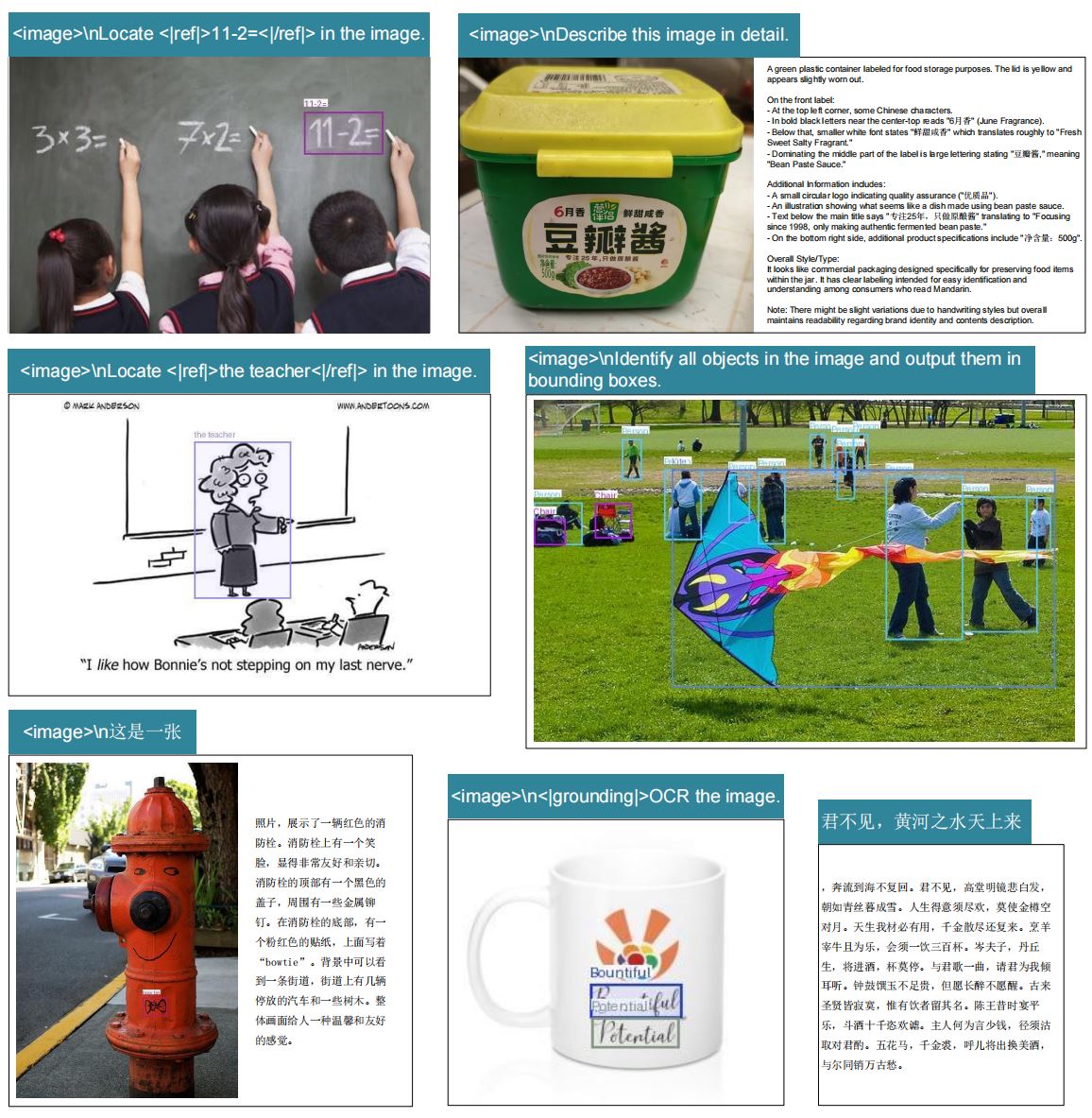

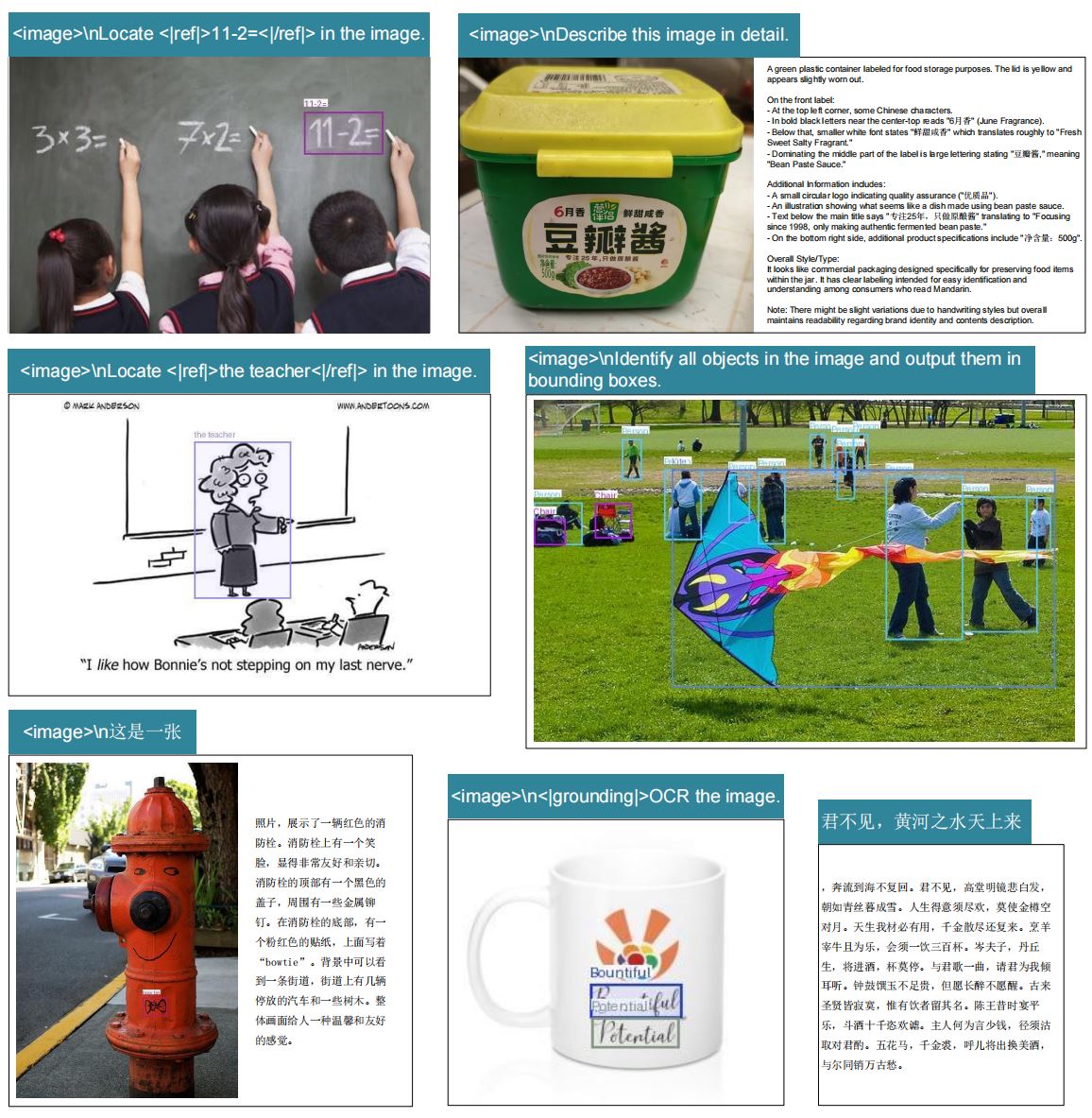

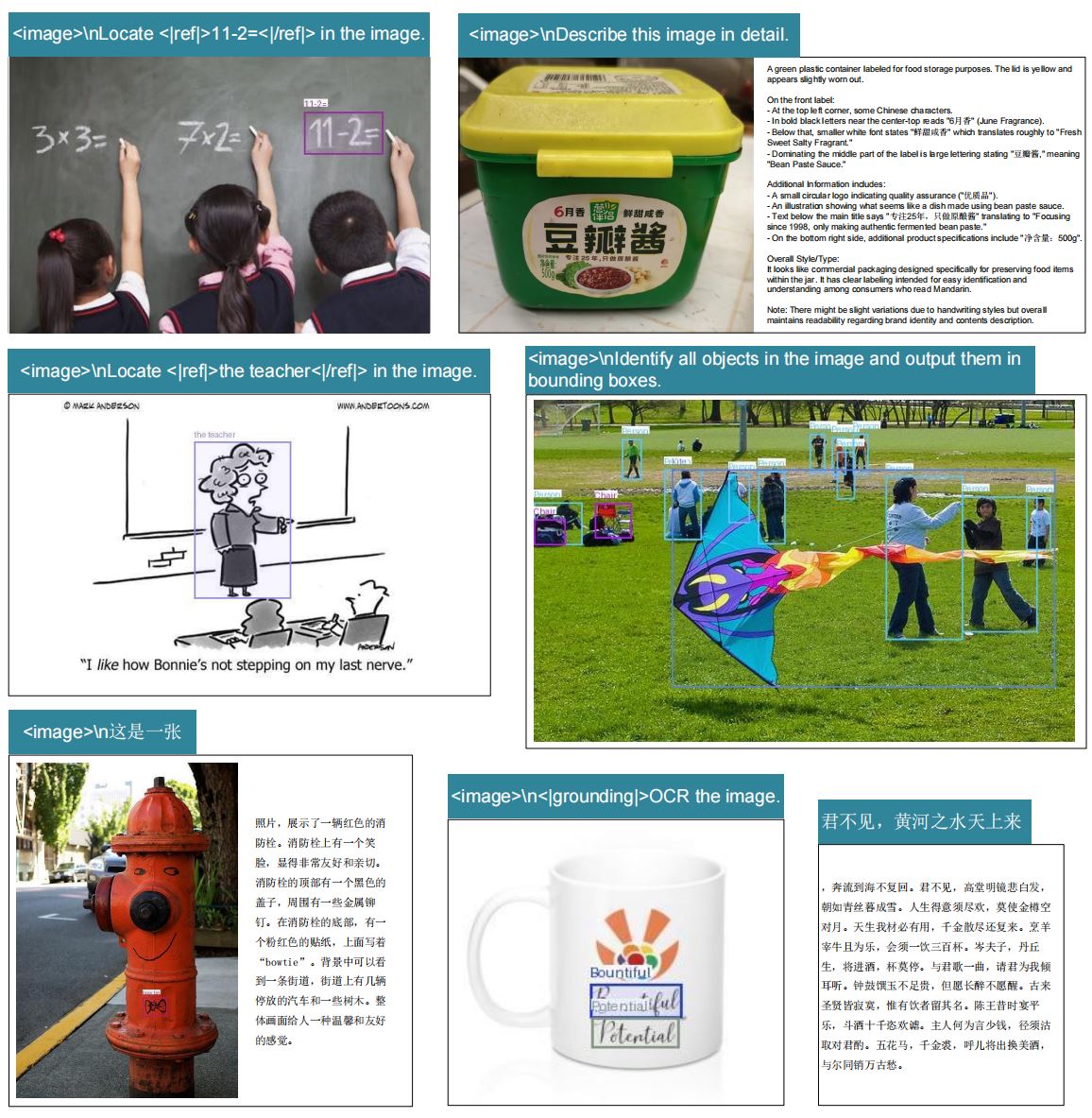

Precise Text Localization (REC)

Prompt: `<image>\nLocate <|ref|>xxxx<|/ref|> in the image.` (For finding specific text blocks).

Freeform/General OCR

Prompt: `<image>\nFree OCR.` (Simple, unconstrained text extraction).

Figure and Chart Analysis

Prompt: `<image>\nParse the figure.` (To understand visual elements within the document).

Detailed Image Description

Prompt: `<image>\nDescribe this image in detail.` (General multimodal capability).

Multilingual Support

Tested on various languages, including complex scripts like Chinese.

Unrivaled OCR Speed and Efficiency

DeepSeek-OCR is engineered for speed and high-volume processing.

Inference Speed

~2500

tokens/s (PDF Concurrency on A100-40G)

Resolution Modes

5+

Supported Resolution Modes (Including Dynamic)

GitHub Stars

7.2k+

Community Support

Frequently Asked Questions About DeepSeek-OCR

For more technical details, please refer to the GitHub repository or the paper.

What is 'Contexts Optical Compression'?

It's the core concept of DeepSeek-OCR, focusing on how a Vision Encoder effectively compresses rich visual context into tokens for the LLM, ensuring maximum fidelity for document understanding.

What hardware is required for high-speed inference?

The vLLM inference shows its best performance on NVIDIA A100-40G or similar high-end GPUs, achieving speeds up to 2500 tokens/s, which is critical for large-scale PDF processing.

Does DeepSeek-OCR support structured data extraction?

Yes. By using the `<|grounding|>Convert the document to markdown.` prompt, the model can effectively extract and structure document content, including tables and lists, into a clean format.

What resolution modes are available?

The model supports Tiny (512×512), Small (640×640), Base (1024×1024), Large (1280×1280) native modes, and a dynamic Gundam mode for flexible and high-fidelity inputs.

How can I contribute or report issues?

You can contribute by forking the official DeepSeek-OCR GitHub repository, submitting Pull Requests, or opening new Issues for bugs and suggestions.

Where can I download the model weights?

The official model weights are hosted on the Hugging Face Hub under the `deepseek-ai/DeepSeek-OCR` repository.

Start Building with DeepSeek-OCR Today

Experience the next-generation performance of Document AI and Contexts Optical Compression.